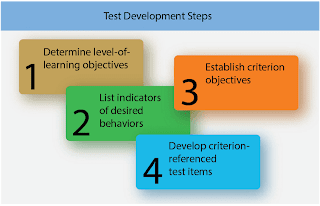

When deciding how to assess learner progress, aviation instructors can follow a four-step process.

- Determine level-of-learning objectives.

- List indicators of desired behaviors.

- Establish criterion objectives.

- Develop criterion-referenced test items.

This process is useful for tests that apply to the cognitive and affective domains of learning, and it can also be used for skill testing in the psychomotor domain. The development process for criterion-referenced tests follows a general-to-specific pattern. [Figure]

Figure: The development process for criterion-referenced tests follows a general-to-specific pattern.

Instructors should be aware that authentic assessment may not be as useful as traditional assessment in the early phases of training, because the learner does not yet know enough about the concepts to participate fully. As discussed in The Learning Process, learners exposed to a new topic first tend to acquire and memorize facts. As learning progresses, they begin to organize their knowledge to formulate an understanding of what they have memorized. Once learners have the knowledge needed to analyze, synthesize, and evaluate (i.e., the application and correlation levels of learning), they can participate more fully in the assessment process.

Determine Level-of-Learning Objectives

The first step in developing an appropriate assessment is to state the individual objectives as general, level-of-learning objectives. Each objective should target one of the learning levels of the cognitive, affective, or psychomotor domain described in The Learning Process. The levels of cognitive learning include knowledge, comprehension, application, analysis, synthesis, and evaluation.

For the understanding level, an objective could be stated as, “Describe how to perform a compression test on an aircraft reciprocating engine.” This objective requires the learner to explain how to do a compression test, but not necessarily to perform one (application level). Further, the learner would not be expected to compare the results of compression tests on different engines (application level), design a compression test for a different type of engine (correlation level), or interpret the results of the compression test (correlation level). A general level-of-learning objective is a good starting point for developing a test because it defines the scope of the learning task.

List Indicators/Samples of Desired Behaviors

The second step is to list the indicators or samples of behavior that give the best indication of the achievement of the objective. The instructor selects behaviors that can be measured and that give the best evidence of learning. For example, if the instructor expects the learner to display the understanding level of learning on compression testing, some of the specific test question answers should describe appropriate tools and equipment, the proper equipment setup, appropriate safety procedures, and the steps used to obtain compression readings. The overall test must be comprehensive enough to give a true representation of the learning to be measured. It is not usually feasible to measure every aspect of a level-of-learning objective, but by carefully choosing samples of behavior, the instructor can obtain adequate evidence of learning.

Establish Criterion Objectives

The next step in the test development process is to define criterion (performance-based) objectives. In addition to the behavior expected, criterion objectives state the conditions under which the behavior is to be performed and the criteria that must be met. If the instructor developed performance-based objectives during the creation of lesson plans, criterion objectives have already been formulated. The criterion objective provides the framework for developing the test items used to measure the level-of-learning objectives. In the compression test example, a criterion objective to measure the understanding level of learning might be stated as, “The learner will demonstrate understanding of compression test procedures for reciprocating aircraft engines by completing a quiz with a minimum passing score of 70 percent.”

Develop Criterion-Referenced Assessment Items

The last step is to develop criterion-referenced assessment items. The development of written test questions is covered in the reference section. While developing written test questions, the instructor should attempt to measure the behaviors described in the criterion objective(s). The questions in the exam for the compression test example should cover all of the areas necessary to give evidence of understanding the procedure. The test results, particularly the questions missed, identify areas that were not adequately covered.
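To make the scoring side of the compression test example concrete, the following Python sketch checks one learner's quiz against the 70 percent criterion and lists the behavior areas in which questions were missed. It assumes each question has been tagged with one of the indicators named in the compression test example (tools and equipment, equipment setup, safety procedures, steps to obtain readings). The `Item` class, the `score_quiz` function, and the sample answers are hypothetical illustrations, not an FAA-prescribed method.

```python
from __future__ import annotations

from dataclasses import dataclass

# Assumed passing criterion, taken from the example criterion objective (70 percent).
PASSING_PERCENT = 70


@dataclass(frozen=True)
class Item:
    """One quiz question, tagged with the behavior indicator it samples."""
    question_id: str
    indicator: str  # hypothetical tag, e.g. "safety procedures"


def score_quiz(items: list[Item], answered_correctly: set[str]) -> tuple[float, bool, list[str]]:
    """Score one learner against a fixed criterion.

    Returns the percent correct, whether the criterion was met, and the
    indicator areas in which at least one question was missed.
    """
    if not items:
        raise ValueError("quiz has no items")
    correct = sum(1 for item in items if item.question_id in answered_correctly)
    percent = 100 * correct / len(items)
    # Criterion-referenced: compare with the fixed standard, not with other learners.
    # Integer arithmetic avoids floating-point rounding exactly at the cutoff.
    met_criterion = correct * 100 >= PASSING_PERCENT * len(items)
    # Areas with missed questions may not have been adequately covered.
    weak_areas = sorted({item.indicator for item in items
                         if item.question_id not in answered_correctly})
    return percent, met_criterion, weak_areas


if __name__ == "__main__":
    quiz = [
        Item("q1", "tools and equipment"), Item("q2", "tools and equipment"),
        Item("q3", "equipment setup"), Item("q4", "equipment setup"),
        Item("q5", "safety procedures"), Item("q6", "safety procedures"),
        Item("q7", "steps to obtain readings"), Item("q8", "steps to obtain readings"),
        Item("q9", "steps to obtain readings"), Item("q10", "steps to obtain readings"),
    ]
    correct_ids = {"q1", "q2", "q3", "q4", "q5", "q7", "q8"}
    print(score_quiz(quiz, correct_ids))
    # (70.0, True, ['safety procedures', 'steps to obtain readings'])
```

In this sample the learner meets the criterion, yet the missed questions still point the instructor toward safety procedures and the steps used to obtain readings. Because the result is compared only with the fixed criterion, one learner's pass or fail does not depend on how other learners scored.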

Performance-based objectives serve as a reference for the development of test items. If the test is the pre-solo knowledge test, the objectives are for the learner to understand the regulations, the local area, the aircraft type, and the procedures to be used. The test should measure the learner’s knowledge in these specific areas. Individual instructors should develop their own tests to measure the progress of their learners. If the test is to measure the readiness of a learner to take a knowledge test, it should be based on the objectives of all the lessons the learner has received.

The objectives in the ACS/PTS ensure the certification of pilots and maintenance technicians at a high level of performance and proficiency, consistent with safety. The ACS/PTS for aeronautical certificates and ratings include areas of operation and tasks that reflect the requirements of the FAA publications mentioned above. Areas of operation define phases of the practical test arranged in a logical sequence within each standard. They usually begin with preflight preparation and end with postflight procedures. Tasks are titles of knowledge areas, flight procedures, or maneuvers appropriate to an area of operation. Included are references to the applicable regulations or publications. Private pilot applicants are evaluated in all tasks of each area of operation. Flight instructor applicants are evaluated on one or more tasks in each area of operation. In addition, certain tasks are required to be covered and are identified by notes immediately following the area of operation titles.

Since evaluators may cover every task in the ACS/PTS on the practical test, the instructor should evaluate all of the tasks before recommending the maintenance technician or pilot applicant for the practical test. While this evaluation is not necessarily formal, it should adhere to the principles of criterion-referenced testing.
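The instructor's check before recommending an applicant amounts to a coverage check: every task in every area of operation must have been evaluated and found to meet the standard. The Python sketch below assumes a simple record of the required tasks and of the tasks the applicant has already performed to standard. The area names echo the preflight and postflight examples in the text, but the task titles, the data layout, and the function names are hypothetical placeholders, not taken from any actual ACS/PTS.

```python
# Hypothetical record layout: area of operation -> list of required task titles.
# The titles used below are placeholders, not taken from any actual ACS/PTS.
RequiredTasks = dict[str, list[str]]


def outstanding_tasks(required: RequiredTasks,
                      met: set[tuple[str, str]]) -> list[tuple[str, str]]:
    """List (area, task) pairs not yet evaluated as meeting the standard.

    A task that was never evaluated is absent from `met`, so it is reported too.
    """
    return [(area, task)
            for area, tasks in required.items()
            for task in tasks
            if (area, task) not in met]


def ready_for_recommendation(required: RequiredTasks,
                             met: set[tuple[str, str]]) -> bool:
    """Recommend the applicant only when no required task is outstanding."""
    return not outstanding_tasks(required, met)


if __name__ == "__main__":
    required = {
        "Preflight Preparation": ["Task A", "Task B"],
        "Postflight Procedures": ["Task C"],
    }
    met = {("Preflight Preparation", "Task A"), ("Postflight Procedures", "Task C")}
    print(outstanding_tasks(required, met))         # [('Preflight Preparation', 'Task B')]
    print(ready_for_recommendation(required, met))  # False
```

Treating an unevaluated task the same as an unsatisfactory one reflects the reasoning in the paragraph above: since the evaluator may cover any task, a task the instructor has not yet checked is still outstanding.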